Hoek–Brown failure criterion

The Hoek–Brown failure criterion is an empirical stress surface that is used in rock mechanics to predict the failure of rock[1][2]. The original version of the Hoek–Brown criterion was developed by Evert Hoek and E. T. Brown in 1980 for the design of underground excavations[3]. In 1988, the criterion was extended for applicability to slope stability and surface excavation problems[4]. An update of the criterion was presented in 2002 that included improvements in the correlation between the model parameters and the geological strength index (GSI)[5].

The basic idea of the Hoek–Brown criterion was to start with the properties of intact rock and to add factors that reduce those properties to account for joints in the rock[4]. Although a similar criterion for concrete had been developed in 1936, the significant tool that the Hoek–Brown criterion gave design engineers was a quantification of the relation between the stress state and Bieniawski's rock mass rating (RMR)[6]. The Hoek–Brown failure criterion is widely used in mining engineering design.


The original Hoek–Brown criterion

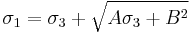

The Hoek–Brown criterion has the form[2]

where  is the effective maximum principal stress,

is the effective maximum principal stress,  is the effective minimum principal stress, and

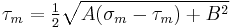

is the effective minimum principal stress, and  are materials constants. In terms of the maximum normal stress (

are materials constants. In terms of the maximum normal stress ( ) and maximum shear stress (

) and maximum shear stress ( )

)

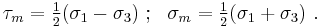

where

We can convert the above relation into a form similar to the Mohr–Coulomb failure criterion by solving for  to get

to get

The material constants  are related to the unconfined compressive (

are related to the unconfined compressive ( ) and tensile strengths (

) and tensile strengths ( ) by[2]

) by[2]

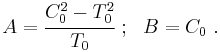
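The relations in this section can be checked numerically. The following is a minimal Python sketch, not a reference implementation: the function names are hypothetical, stresses are assumed to be compression-positive and in consistent units, and the strength values in the usage note are illustrative rather than measured data.

```python
import math


def hoek_brown_constants(c0, t0):
    """Return (A, B) from the unconfined compressive strength c0 and
    the tensile strength t0, both given as positive magnitudes."""
    if c0 <= 0 or t0 <= 0:
        raise ValueError("c0 and t0 must be positive")
    a = (c0**2 - t0**2) / t0
    b = c0
    return a, b


def sigma1_at_failure(sigma3, a, b):
    """Maximum principal stress at failure for a given minimum
    principal stress (assumed convention: compression positive)."""
    radicand = a * sigma3 + b**2
    if radicand < 0:
        raise ValueError("sigma3 lies outside the range of the criterion")
    return sigma3 + math.sqrt(radicand)


def tau_m_roots(sigma_m, a, b):
    """Both roots of 4*tau^2 + a*tau - (a*sigma_m + b^2) = 0,
    returned as (root with '+', root with '-')."""
    disc = a**2 + 16.0 * (a * sigma_m + b**2)
    if disc < 0:
        raise ValueError("no real solution for this sigma_m")
    root = math.sqrt(disc)
    return (-a + root) / 8.0, (-a - root) / 8.0
```

With the illustrative values `c0 = 100` and `t0 = 10`, `hoek_brown_constants` gives `A = 990` and `B = 100`; `sigma1_at_failure(0, 990, 100)` then recovers the compressive strength, and `sigma1_at_failure(-10, 990, 100)` returns zero, which corresponds to uniaxial tension.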

Symmetry issue

If we set $\sigma_m = 0$ in the above equation, we get the pure shear Hoek–Brown criterion:

$$\tau_m = \tfrac{1}{8}\left[-A \pm \sqrt{A^2 + 16B^2}\right]$$

The two values of $\tau_m$ are unsymmetric with respect to the $\sigma_m$ axis in the $\sigma_m\tau_m$-plane. This feature of the Hoek–Brown criterion appears unphysical[2] and care must be exercised when using this criterion in numerical simulations.
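The asymmetry can be made concrete with a short numerical check. The sketch below is only an illustration under stated assumptions: `pure_shear_roots` is a hypothetical helper, and it solves the quadratic $4\tau_m^2 + A\tau_m - B^2 = 0$ obtained by squaring the criterion at $\sigma_m = 0$.

```python
import math


def pure_shear_roots(a, b):
    """Both roots of 4*tau^2 + a*tau - b^2 = 0, i.e. the
    Hoek-Brown relation for tau_m evaluated at sigma_m = 0."""
    root = math.sqrt(a**2 + 16.0 * b**2)
    return (-a + root) / 8.0, (-a - root) / 8.0


def asymmetry(a, b):
    """Sum of the two roots; it is zero only if the roots are
    mirror images of each other about the sigma_m axis."""
    pos, neg = pure_shear_roots(a, b)
    return pos + neg
```

By Vieta's formulas the two roots sum to $-A/4$, so `asymmetry(a, b)` is nonzero whenever $A \neq 0$, and the positive and negative shear limits then differ in magnitude.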

References

- Hoek, E. and Brown, E. T. (1980). Underground Excavations in Rock. London: Institution of Mining and Metallurgy.

- Pariseau, W. G. (2009). Design Analysis in Rock Mechanics. Taylor and Francis. p. 499.

- Hoek, E. and Brown, E. T. (1980). "Empirical strength criterion for rock masses". J. Geotechnical Engineering Division ASCE: 1013–1025.

- Hoek, E. and Brown, E. T. (1988). "The Hoek-Brown failure criterion - a 1988 update". Proc. 15th Canadian Rock Mech. Symp.: 31–38. http://www.rocscience.com/library/pdf/RL_2.pdf.

- Hoek, E., Carranza-Torres, C. T. and Corkum, B. (2002). "Hoek-Brown failure criterion-2002 edition". Proceedings of the fifth North American rock mechanics symposium 1: 267–273. http://www.rockeng.utoronto.ca/downloads/rocdata/webhelp/pdf_files/theory/Hoek-Brown_Failure_Criterion-2002_Edition.pdf.

- Bieniawski, Z. T. (1976). "Rock mass classification in rock engineering". In Z. T. Bieniawski (ed.), Proc. Symposium on Exploration for Rock Engineering (Balkema, Cape Town): 97–106.
